When evaluating your website’s visitor traffic, you are likely to find that a large portion of your hits are generated by web bots. Many of these bots are the nice kind: they are indexing your website for inclusion in a variety of online search sites.

Unfortunately, there are times when you may need to reduce the amount of traffic generated by bots to improve the experience for humans. You may also need to throttle or even block bots that hit pages on which you use a third-party API.

Twitter has just required an API signup for all apps, and many other sites are now enforcing rules to reduce their costs. For instance, Amazon will now limit the number of hits to its affiliate stores. If bots generate too many hits, you may find that real visitors won’t be able to buy things in your store.

When Amazon announced this, we thought we would run a test: reducing the hit rate from Googlebot using the Webmaster Tools website.

Yes, we were as skeptical as everyone else… do these bots have minds of their own like a Borg collective, or can they be controlled by a webmaster?

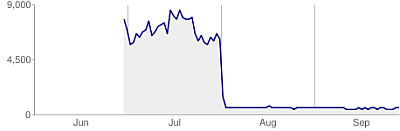

We set up our account well over a year ago, mostly to provide sitemap indexes and see what it was all about. This summer we were getting around 9,000 hits a day from Googlebot, and when the Amazon notice came we went in and turned down the crawl rate.
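If you want to check your own daily bot count before changing anything, the server access log is a reasonable place to start. The sketch below is only an illustration, not the method we used: it assumes a log in the common "combined" format and simply counts lines per day whose text contains "Googlebot". The function name `bot_hits_per_day` and the sample lines are made up for this example. Keep in mind that a user-agent string can be spoofed, so treat the result as an estimate.

```python
import re
from collections import Counter

# Date part of a combined-log timestamp, e.g. [12/Aug/2009:06:25:24 +0000]
DATE_RE = re.compile(r"\[(\d{2}/[A-Za-z]{3}/\d{4}):")


def bot_hits_per_day(lines, agent_marker="Googlebot"):
    """Count log lines per day whose text contains agent_marker (case-insensitive)."""
    marker = agent_marker.lower()
    counts = Counter()
    for line in lines:
        if marker not in line.lower():
            continue  # not a hit from the bot we are looking for
        match = DATE_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Made-up sample lines for illustration only; they are not taken from our logs.
SAMPLE_LINES = [
    '192.0.2.10 - - [12/Aug/2009:06:25:24 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.10 - - [12/Aug/2009:06:25:54 +0000] "GET /about HTTP/1.1" 200 734 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '192.0.2.55 - - [12/Aug/2009:07:01:02 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (Windows; U; Windows NT 5.1) Firefox/3.5"',
    '192.0.2.10 - - [13/Aug/2009:00:00:10 +0000] "GET /store HTTP/1.1" 200 901 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

if __name__ == "__main__":
    for day, hits in bot_hits_per_day(SAMPLE_LINES).items():
        print(day, hits)
```

To run it against a real log, pass an open file instead of the sample list, for example `bot_hits_per_day(open("access.log"))`, where `access.log` stands in for whatever your server calls its log file.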

We went into the Webmaster Tools Settings area and, using the slider, reduced the crawl rate to one hit every 30 seconds. This should definitely bring any Amazon store hits below the API restriction level.
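To put the slider setting in perspective, a quick back-of-the-envelope calculation gives the most hits a crawler could make in a day at a fixed interval. This is a minimal sketch that assumes the bot sticks exactly to the interval around the clock, which a real crawler may not do; `max_hits_per_day` is just a name chosen for the example.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def max_hits_per_day(seconds_between_hits: float) -> int:
    """Upper bound on daily hits for a crawler that waits this long between requests."""
    if seconds_between_hits <= 0:
        raise ValueError("interval must be positive")
    return int(SECONDS_PER_DAY // seconds_between_hits)


if __name__ == "__main__":
    print(max_hits_per_day(30))  # 2880
```

So one hit every 30 seconds works out to a ceiling of 2,880 hits a day.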

Our per-day stats went from a previous 8,613 to an average of 2,914, with a current low of 524.
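For anyone who prefers percentages, the drop is easy to work out from those three numbers. The sketch below assumes that "previous" is the daily figure before the change and that "average" and "current low" are daily figures after it; `percent_drop` is a helper invented for this example.

```python
def percent_drop(before: float, after: float) -> float:
    """Percentage reduction going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


if __name__ == "__main__":
    previous, average, current_low = 8613, 2914, 524
    print(f"previous -> average:     {percent_drop(previous, average):.1f}%")
    print(f"previous -> current low: {percent_drop(previous, current_low):.1f}%")
```

Under that reading, the average is down roughly 66% from the previous figure, and the current low is down roughly 94%.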

As you can see, the chart shows a dramatic change in the number of pages read on the site.

So for almost two full months now, we have dramatically reduced the hits per day from Google.

During this time we saw almost no change in the number of real visitors or advertising banner revenue.

We did see a fluctuation in the number of unique visitors: our August stats actually tripled from previous months, and then September fell off. This could be due to school vacations ending, or bosses coming back from vacation and people not surfing at work…

At this point we are going to restore a portion of the crawl rate, but it seems that other factors, such as writing more posts, tweeting them, and getting backlinks, are the best things you can do to improve your unique visitor count.

The one big thing we wanted to learn from this test was whether site rank or indexed links would be affected, and we saw no real change… Google Webmaster Tools will allow you to control their bots if you need to.

It would be nice if other search indexes followed their lead, but we don’t see it happening anytime soon.